Artificial Intelligence (AI) and Machine Learning (ML) mark a new era in fraud detection, empowering algorithms to be both proactive and predictive, spotting patterns and potential fraudulent activity before it happens.

However, as financial transactions become increasingly digital, the tactics fraudsters use to steal people’s information, identities, and money are evolving too.

It’s crucial for organisations to continually refine their approaches and stay on top of the latest fraud trends and predictions if they are to detect and prevent fraud. The same AI capabilities used for good can be manipulated for more sophisticated fraudulent activity, as the rise in generative AI fraud shows.

What is AI fraud detection?

AI fraud detection is the use of machine learning techniques to identify and prevent fraudulent activities by analysing large datasets and recognising patterns that indicate potential fraud. AI models learn from trends and can highlight suspicious attributes or relationships that may not be visible to a human analyst, but indicate a larger pattern of fraud.

How do AI and machine learning detect and prevent fraud?

Machine learning has become an invaluable tool in the fight against fraud, helping companies move from a reactive to a proactive approach by surfacing warning signs that a human reviewer could easily miss.

Gemma Martin

When addressing the benefits of using machines to detect fraudulent activity, XYZ said: “The great value of machine learning is the sheer volume of data you can analyse, but selecting the correct data and approach is critical. Supervised learning, which incorporates prior knowledge of fraud tactics to guide pattern identification, is effective because it’s easy to teach the machine once there’s a clear target for it to learn.”

Unsupervised machine learning techniques can be used when labelled data on past outcomes isn’t available. These anomaly detection models help pick up on unknown trends or aberrations in transactions, and can surface smaller ‘pockets’ of unusual activity.

By combining the two, the system can recognise previous patterns of confirmed fraud, and raise the alert if a pattern of activity changes, increasing fraud detection rates and reducing false positives.
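
To make the idea concrete, here is a minimal Python sketch of a hybrid check using only the standard library. It assumes two hypothetical features per transaction (amount and hour of day) and a tiny made-up labelled history. The supervised part is a nearest-centroid classifier, chosen for brevity rather than because any particular production system uses it; the unsupervised part is a simple z-score of the amount against the customer’s own past spending. All names and thresholds are illustrative.

```python
from statistics import mean, pstdev

# Hypothetical labelled history: ((amount, hour_of_day), label), 1 = confirmed fraud, 0 = legitimate.
LABELLED = [
    ((25.0, 14), 0), ((40.0, 10), 0), ((32.0, 16), 0), ((18.0, 12), 0),
    ((950.0, 3), 1), ((1200.0, 2), 1), ((870.0, 4), 1),
]


def fit_nearest_centroid(rows):
    """Supervised step: standardise each feature, then store one centroid per class."""
    n = len(rows[0][0])
    mus = [mean(x[i] for x, _ in rows) for i in range(n)]
    sds = [pstdev([x[i] for x, _ in rows]) or 1.0 for i in range(n)]

    def scale(x):
        return [(x[i] - mus[i]) / sds[i] for i in range(n)]

    centroids = {}
    for label in (0, 1):
        pts = [scale(x) for x, y in rows if y == label]
        centroids[label] = [mean(p[i] for p in pts) for i in range(n)]
    return scale, centroids


def predict_label(model, x):
    """Return the label of the nearest class centroid (squared Euclidean distance)."""
    scale, centroids = model
    z = scale(x)

    def dist(c):
        return sum((a - b) ** 2 for a, b in zip(z, c))

    return min(centroids, key=lambda label: dist(centroids[label]))


def is_anomalous(amount, customer_history, threshold=3.0):
    """Unsupervised step: z-score of the amount against this customer's own past amounts."""
    mu, sd = mean(customer_history), pstdev(customer_history)
    if sd == 0:
        return amount != mu
    return abs(amount - mu) / sd > threshold


def hybrid_alert(model, x, customer_history):
    """Combine both signals and return the reasons (if any) for raising an alert."""
    reasons = []
    if predict_label(model, x) == 1:
        reasons.append("resembles confirmed fraud")
    if is_anomalous(x[0], customer_history):
        reasons.append("unusual for this customer")
    return reasons
```

With `model = fit_nearest_centroid(LABELLED)` and a customer history of `[20.0, 22.0, 25.0, 19.0, 24.0]`, a transaction of `(300.0, 13)` does not resemble the confirmed fraud cases but is still returned as `["unusual for this customer"]`: the kind of change in pattern the unsupervised side is there to catch.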

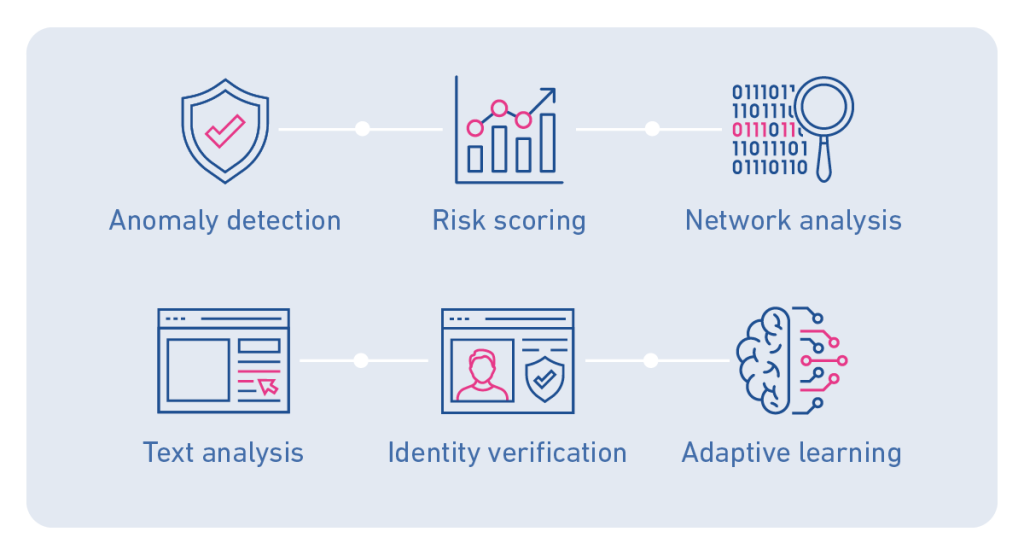

Some common applications of AI and machine learning in fraud prevention include:

Anomaly detection

Machine-learning algorithms identify unusual patterns or deviations from normal behaviour in transactional data. By training on historical data, the algorithms recognise legitimate transactions and flag suspicious activities indicating potential fraud.
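
As a rough illustration, the sketch below flags new amounts that sit far from a customer’s historical spending using the median and the median absolute deviation (a “modified z-score”). This is a simple statistical baseline standing in for a trained model; the function name is hypothetical and the 3.5 cut-off is a commonly quoted rule of thumb rather than a recommendation.

```python
from statistics import median


def modified_z_outliers(history, new_amounts, threshold=3.5):
    """Flag new amounts whose modified z-score against historical amounts exceeds threshold.

    Uses the median and median absolute deviation (MAD), which are less distorted
    by past outliers than the mean and standard deviation.
    """
    med = median(history)
    mad = median(abs(x - med) for x in history)
    if mad == 0:
        # Degenerate history (most values identical): flag anything that differs.
        return [a != med for a in new_amounts]
    # 0.6745 rescales the MAD so scores are comparable to standard z-scores for normal data.
    return [0.6745 * abs(a - med) / mad > threshold for a in new_amounts]
```

For a history of `[20.0, 22.0, 25.0, 19.0, 24.0, 21.0, 23.0]`, a new amount of `23.0` passes while `60.0` is flagged.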

Risk scoring

Machine-learning models assign risk scores to transactions or user accounts based on various factors, such as transaction amount, location, frequency, and past behaviour. Higher risk scores help prioritise resources and focus on specific transactions or accounts that warrant further investigation.
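
A minimal sketch of risk scoring, assuming four hypothetical factors that mirror the ones listed above. The weights and bias are hand-picked for illustration; in practice they would be learned from labelled outcomes (for example by logistic regression, whose output takes this same form). The score is then used to build a prioritised review queue.

```python
import math
from dataclasses import dataclass


@dataclass
class Transaction:
    txn_id: str
    amount: float            # transaction amount
    km_from_home: float      # distance from the customer's usual location
    txns_last_hour: int      # recent frequency
    prior_chargebacks: int   # past behaviour


# Hypothetical, hand-picked weights for illustration; a real model would learn these.
WEIGHTS = {"amount": 0.002, "km_from_home": 0.001, "txns_last_hour": 0.4, "prior_chargebacks": 1.2}
BIAS = -4.0


def risk_score(t: Transaction) -> float:
    """Weighted sum of risk factors squashed into a 0-1 score with the logistic function."""
    z = BIAS + sum(weight * getattr(t, name) for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def review_queue(txns, threshold=0.5):
    """Return transactions at or above the threshold, highest risk first."""
    risky = [t for t in txns if risk_score(t) >= threshold]
    return sorted(risky, key=risk_score, reverse=True)
```

Analysts would then work through `review_queue(...)` from the top, which is how higher scores help focus investigation effort.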

Network analysis

Machine-learning techniques, like graph analysis, uncover fraudulent networks by analysing relationships between entities and identifying unusual connections or clusters.
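
One simple form of network analysis is linking accounts that share identifiers, such as a device or email address, and looking for unusually large clusters. The sketch below does this with a union-find (disjoint-set) structure. The link format and the `min_size` threshold are assumptions for illustration; real graph analysis would typically weigh many more signals.

```python
from collections import defaultdict


def account_clusters(links):
    """Group accounts that share any attribute (device, email, phone...) using union-find.

    links: iterable of (account_id, attribute) pairs, e.g. ("acc1", "device:abc").
    """
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_a] = root_b

    first_account_with = {}
    for account, attribute in links:
        find(account)  # register the account even if it shares nothing
        if attribute in first_account_with:
            union(account, first_account_with[attribute])
        else:
            first_account_with[attribute] = account

    clusters = defaultdict(set)
    for account in list(parent):
        clusters[find(account)].add(account)
    return list(clusters.values())


def suspicious_rings(links, min_size=3):
    """Flag clusters with at least min_size accounts (threshold is illustrative)."""
    return [c for c in account_clusters(links) if len(c) >= min_size]
```

Accounts A and B sharing a device, and B and C sharing an email address, end up in one cluster `{A, B, C}` even though A and C share nothing directly.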

Text analysis

Algorithms analyse unstructured text data like social media posts and emails to identify patterns or keywords indicating fraud or scams.
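
At its simplest, keyword-based text analysis can be sketched as weighted pattern matching. The patterns, weights and threshold below are hypothetical. Production systems usually rely on trained language models rather than a fixed list, so treat this as a baseline that shows the shape of the idea.

```python
import re

# Hypothetical indicator patterns and weights, chosen for illustration only.
INDICATORS = {
    r"\burgent(ly)?\b": 1.0,
    r"\bverify your (account|identity)\b": 2.0,
    r"\bgift cards?\b": 2.0,
    r"\bwire transfer\b": 1.5,
    r"https?://\d{1,3}(\.\d{1,3}){3}": 2.5,  # link pointing at a raw IP address
}


def scam_score(text):
    """Sum the weights of every indicator pattern found in the lower-cased text."""
    lowered = text.lower()
    hits = [pattern for pattern in INDICATORS if re.search(pattern, lowered)]
    return sum(INDICATORS[p] for p in hits), hits


def looks_like_scam(text, threshold=3.0):
    """Hypothetical cut-off: treat a total score at or above threshold as suspicious."""
    score, _ = scam_score(text)
    return score >= threshold
```

A message such as `"URGENT: verify your account at http://192.168.4.20/login"` matches three indicators and scores 5.5, while an everyday message scores 0.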

Identity verification

Machine-learning models verify user-provided information, such as identification documents or facial recognition data, helping to prevent identity theft.
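
Part of identity verification is checking that the details a user types in match a trusted record, such as data extracted from an identity document. The sketch below assumes such a reference record already exists and compares fields with Python’s `difflib.SequenceMatcher`, so minor formatting differences are tolerated while genuine mismatches are reported. The field names and the 0.85 threshold are illustrative assumptions; document and facial-recognition checks themselves are out of scope.

```python
from difflib import SequenceMatcher


def normalise(value):
    """Lower-case and collapse whitespace so formatting differences don't count as mismatches."""
    return " ".join(value.lower().split())


def field_similarity(a, b):
    """Similarity ratio between 0.0 and 1.0 from difflib's SequenceMatcher."""
    return SequenceMatcher(None, normalise(a), normalise(b)).ratio()


def verify_identity(provided, reference, threshold=0.85):
    """Return {field: similarity} for fields that fall below the threshold.

    provided: details entered by the user; reference: a hypothetical trusted record,
    e.g. data already extracted from an identity document.
    """
    mismatches = {}
    for field, expected in reference.items():
        score = field_similarity(provided.get(field, ""), expected)
        if score < threshold:
            mismatches[field] = round(score, 2)
    return mismatches
```

For example, `"jane a smith"` against a reference of `"Jane A. Smith"` scores about 0.96 and passes, while a different postcode is returned as a mismatch.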

Adaptive learning

Machine-learning models adapt to new information, allowing them to stay up to date and detect emerging fraud patterns as tactics evolve.
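
One way to let a model adapt is online learning: updating it each time a case is confirmed, instead of retraining from scratch. The sketch below is a minimal online logistic regression trained by stochastic gradient descent; the class name, feature encoding and learning rate are hypothetical.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class OnlineFraudModel:
    """Logistic regression updated one confirmed case at a time (stochastic gradient descent)."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x):
        return sigmoid(self.bias + sum(w * xi for w, xi in zip(self.weights, x)))

    def update(self, x, label):
        """Nudge the weights towards a newly confirmed outcome (1 = fraud, 0 = legitimate)."""
        error = self.predict_proba(x) - label  # gradient of log-loss w.r.t. the logit
        self.weights = [w - self.learning_rate * error * xi for w, xi in zip(self.weights, x)]
        self.bias -= self.learning_rate * error
```

Starting from zero weights, the model scores a newly emerging pattern `[1.0, 0.0]` at 0.5; as confirmed examples of that pattern arrive (alongside legitimate `[0.0, 1.0]` cases), its fraud score for the pattern rises without any full retraining.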

Generative AI: an emerging and ever-evolving fraud threat

Generative AI, which includes techniques such as Generative Adversarial Networks (GANs), has revolutionised various industries with its ability to create realistic and plausible data from just a few simple prompts. However, the same technology that brings innovation and creativity also poses a significant threat. Some fraud challenges of Generative AI include:

- Deepfake technology: Deepfakes are hyper-realistic images, videos and audio recordings that can be very hard to distinguish from authentic content. This technology has been misused to create fake news, spread misinformation, and impersonate people. Malicious actors can now imitate someone’s voice with remarkable accuracy. The risks include impersonating individuals for financial gain, committing identity theft, or manipulating audio evidence in legal cases.

- Phishing and social engineering: Generative AI can be harnessed to create highly convincing phishing emails, messages, or even calls by mimicking the communication style of trusted entities. This elevates the sophistication of social engineering attacks, making it more challenging for individuals and organisations to discern between genuine and fraudulent communication.

The more Generative AI advances, the bigger the threat it poses. New techniques and applications are constantly emerging, requiring continuous efforts from cybersecurity experts to stay ahead of potential risks.

People, businesses, and policymakers must stay informed about the latest trends and capabilities, and develop robust strategies to counteract the associated risks.

Real use cases of AI for fraud detection

Just as Gen AI can predict the next word in a sentence – whether that be in texts, in emails, or when you’re inputting your search query online – machine learning models can predict the likelihood of someone being a fraudster. Each involves making predictions based on patterns and associations in data.

By employing innovative fraud detection techniques, AI plays a pivotal role in safeguarding industries against malicious activity and financial loss. But what does that safeguarding look like?

- Crypto trace prototype: AI monitors blockchain transactions for suspicious activities in cryptocurrency’s decentralised and pseudonymous realm, identifying irregularities like rapid fund transfers or attempts to obfuscate fund origins.

- Scam detection chatbots: Integrated into online platforms, AI-powered chatbots analyse language patterns and user behaviour to detect scams, such as identifying phishing attempts, warning users about suspicious links, and reporting fraudulent accounts.

- Legitimacy analysis: AI models employing logic and natural language processing assess website legitimacy by analysing content, user reviews, and transaction history. This protects e-commerce users from fraudulent websites.

- Payment fraud detection: In banking, AI analyses real-time transaction data, quickly identifying unusual patterns, like multiple transactions from different locations, to prevent unauthorised access and financial loss.

- Credit card fraud detection: Similar to payment fraud, AI-driven algorithms analyse credit card transaction patterns, flagging potential fraud when cards are used for high-value transactions in unfamiliar locations.

- Fraud detection in banking: Banks utilise machine learning to comprehensively detect fraud by analysing customer behaviour, account activity, and transaction history, identifying irregular withdrawal patterns, unfamiliar login attempts, or sudden changes in spending behaviour.

- E-commerce fraud detection: AI is crucial in preventing fraud in e-commerce by analysing user behaviour, purchase history, and device information, flagging transactions that deviate from usual patterns, or detecting unusual login locations.

- Insurance fraud detection: Insurance companies use AI to analyse claim data, historical patterns, and various factors to detect inconsistencies or suspicious patterns, triggering further investigation for claims filed shortly after policy initiation or with discrepancies in incident details.
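
Several of these use cases, such as payment and credit card fraud detection, rely on spotting transactions from locations that don’t add up. A common building block is an “impossible travel” check, sketched below: it computes the great-circle distance between consecutive transactions on the same card and flags any pair implying an implausible travel speed. The 900 km/h limit and the function names are assumptions for illustration, and a signal like this would typically be one input to a model rather than a verdict on its own.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def impossible_travel(txns: list[tuple[datetime, float, float]], max_kmh=900.0):
    """Return indices of transactions implying travel faster than max_kmh since the previous one.

    txns: (timestamp, latitude, longitude) for one card, sorted by time.
    """
    flagged = []
    for i in range(1, len(txns)):
        (t_prev, lat_prev, lon_prev), (t_cur, lat_cur, lon_cur) = txns[i - 1], txns[i]
        km = haversine_km(lat_prev, lon_prev, lat_cur, lon_cur)
        hours = (t_cur - t_prev).total_seconds() / 3600
        # Simultaneous transactions more than 1 km apart count as infinitely fast.
        speed = km / hours if hours > 0 else (math.inf if km > 1.0 else 0.0)
        if speed > max_kmh:
            flagged.append(i)
    return flagged
```

For a card used in London at 10:00, Manchester at 14:00 and New York at 15:00 on the same day, only the New York transaction (index 2) is flagged.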

AI continually learns and adapts to new fraudulent tactics, offering a proactive and dynamic approach to safeguarding various industries. This helps organisations keep their finances and data safe and maintain that all-important trust between company and customer.

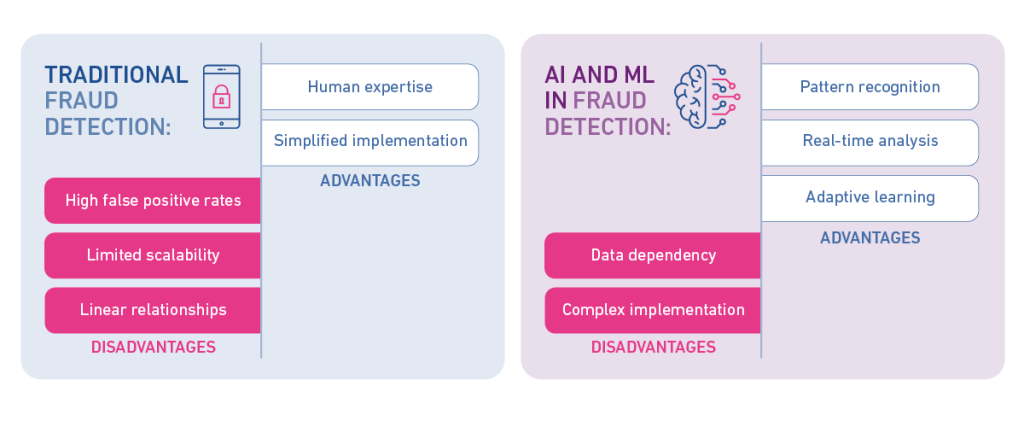

Traditional fraud detection vs. AI and ML

While AI and machine learning have introduced a paradigm shift in fraud detection capabilities, traditional methods still rely on rule-based systems and human intervention. But how do the two methods compare?

Traditional Fraud Detection:

Advantages

- Simplified implementation: Traditional methods often employ predefined rules (rule-based approach) to identify suspicious activities. This is straightforward to implement.

- Human expertise: Human analysts play a crucial role in traditional fraud detection, bringing domain expertise and intuition to the process.

Disadvantages

- Linear relationships: Rules-based systems operate on fixed relationships (if X then Y), and cannot interpret the many complex interactions between different data points and features.

- Limited scalability: Traditional systems may struggle to scale effectively, especially as transaction volumes and complexity increase.

- High false positive rates: Rule-based systems leave little room for nuance and can therefore generate many false positives, leading to unnecessary investigations and customer inconvenience.
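
To illustrate the fixed “if X then Y” nature of rule-based systems, here is a minimal Python sketch with three hypothetical rules and illustrative thresholds.

```python
from typing import Callable, NamedTuple


class Rule(NamedTuple):
    name: str
    condition: Callable[[dict], bool]


# Hypothetical fixed rules with illustrative thresholds: each is a simple "if X then flag".
RULES = [
    Rule("high_amount", lambda t: t["amount"] > 1000),
    Rule("foreign_country", lambda t: t["country"] != t["home_country"]),
    Rule("night_time", lambda t: t["hour"] < 6),
]


def apply_rules(txn):
    """Return the names of every rule the transaction triggers."""
    return [rule.name for rule in RULES if rule.condition(txn)]
```

A customer on holiday buying a £1,200 flight abroad at 5am triggers all three rules, even though the purchase may be perfectly legitimate, while a £999.99 purchase at home at noon triggers none. Each rule fires independently on a hard threshold, with no sense of how the factors interact for that particular customer.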

AI and ML in Fraud Detection:

Advantages

- Pattern recognition: AI and ML algorithms excel at recognising complex patterns, data point interactions and anomalies in large datasets, enabling more accurate fraud detection.

- Real-time analysis: AI allows for real-time analysis of transactions, providing a rapid response to potential fraudulent activities.

- Adaptive learning: ML algorithms can dynamically adapt to new fraud tactics through continuous learning, enhancing their effectiveness over time.

Disadvantages

- Data dependency: AI and ML models rely heavily on large, diverse datasets for training, and inaccurate or incomplete data undermines the model’s accuracy.

- Complex implementation: Integrating AI and ML into existing systems can be complex and requires a significant initial investment.

Why should organisations of all sizes introduce machine learning to fraud detection?

Teams dedicated to fraud management across various industries, from banking and financial services to retail and healthcare, confront escalating risk levels amid a convergence of threats. Our UK Fraud Index for the first quarter of 2022 indicated a surge in fraud, particularly in areas such as cards, asset finance, and loans, which saw the highest rate in three years.

Our 2022 UK&I Identity and Fraud Report underscores a 22% increase in fraud losses in the UK since 2021, with 90% of cases originating online. The report states, “AI and Machine Learning are now key technologies for online customer identification, authentication, and fraud prevention”.

While the exposure to these risks varies, larger, more sophisticated organisations may already possess the internal expertise, technological resources, and data access required to mitigate fraud risks. Younger and mid-market entities, including start-ups and scale-ups, may have less protection, rendering them more susceptible to risk.

It’s no longer enough to be reactive; teams need to be proactive and, where possible, predictive. This is where AI and ML can help, and are already helping, businesses stay ahead of the curve.

The challenges and solutions of AI and ML for fraud

AI and ML offer opportunities in fraud detection but also come with their own set of challenges. However, because both technologies evolve quickly, solutions are emerging for many of the common challenges that arise.

Hallucination: addressing fabricated responses in AI models

Hallucination, where AI systems generate fictitious responses, poses a significant challenge when AI and ML are applied to fraud detection. Efforts are underway to mitigate this risk, with various techniques being explored to stop language models from generating inaccurate information.

Notably, during the pre-SAML and Open EO conferences, a commitment was made to support companies facing legal challenges related to copyright infringement. This financial assistance aims to aid organisations entangled in legal battles arising from deploying AI and ML models, acknowledging the risks and responsibilities associated with these technologies.

Bias and fairness: crucial considerations in AI and ML development

Fairness is paramount when developing and deploying effective AI and ML systems. Biases in data analysis can lead to incorrect conclusions, so it’s key that AI and ML frameworks do not discriminate based on factors such as sex, race, religion, disability, or colour.
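
One practical way to check for this kind of bias is to compare error rates across groups, for example the false positive rate (the share of legitimate customers wrongly flagged). The sketch below assumes records of `(group, flagged, is_fraud)` with hypothetical group labels. False positive rate parity is only one of several fairness measures, and which one is appropriate depends on the context.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per-group false positive rate: legitimate cases flagged / all legitimate cases.

    records: iterable of (group, flagged, is_fraud) tuples.
    """
    legit = defaultdict(int)
    flagged_legit = defaultdict(int)
    for group, flagged, is_fraud in records:
        if not is_fraud:
            legit[group] += 1
            if flagged:
                flagged_legit[group] += 1
    return {group: flagged_legit[group] / legit[group] for group in legit}


def max_fpr_gap(records):
    """Largest difference in false positive rate between any two groups."""
    rates = false_positive_rates(records)
    return max(rates.values()) - min(rates.values())
```

If legitimate customers in one group are flagged twice as often as those in another, the gap makes that visible and prompts a closer look at the data and features behind it.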

Striking a balance between fostering innovation and maintaining fairness is essential. While regulations are necessary, they should not impede companies from securely conducting research. Failure to strike this balance could hinder the UK’s position in developing a globally leading AI sector.

Governance and data privacy: navigating the landscape of AI growth

The unprecedented volume of data available propels the growth of AI and machine learning. However, this surge in data raises concerns about governance and privacy. As the industry continues to harness data for AI advancements, robust governance frameworks are crucial to ensure ethical and responsible use.

How Experian helps you stay vigilant and informed

As we continue to unravel the complexities of financial security, it’s important to stay informed and vigilant.

To learn more about fraud prevention tools and deepen your understanding of the innovative technologies shaping the future of security, you can find a selection of handy guides on our website or speak with an expert today.